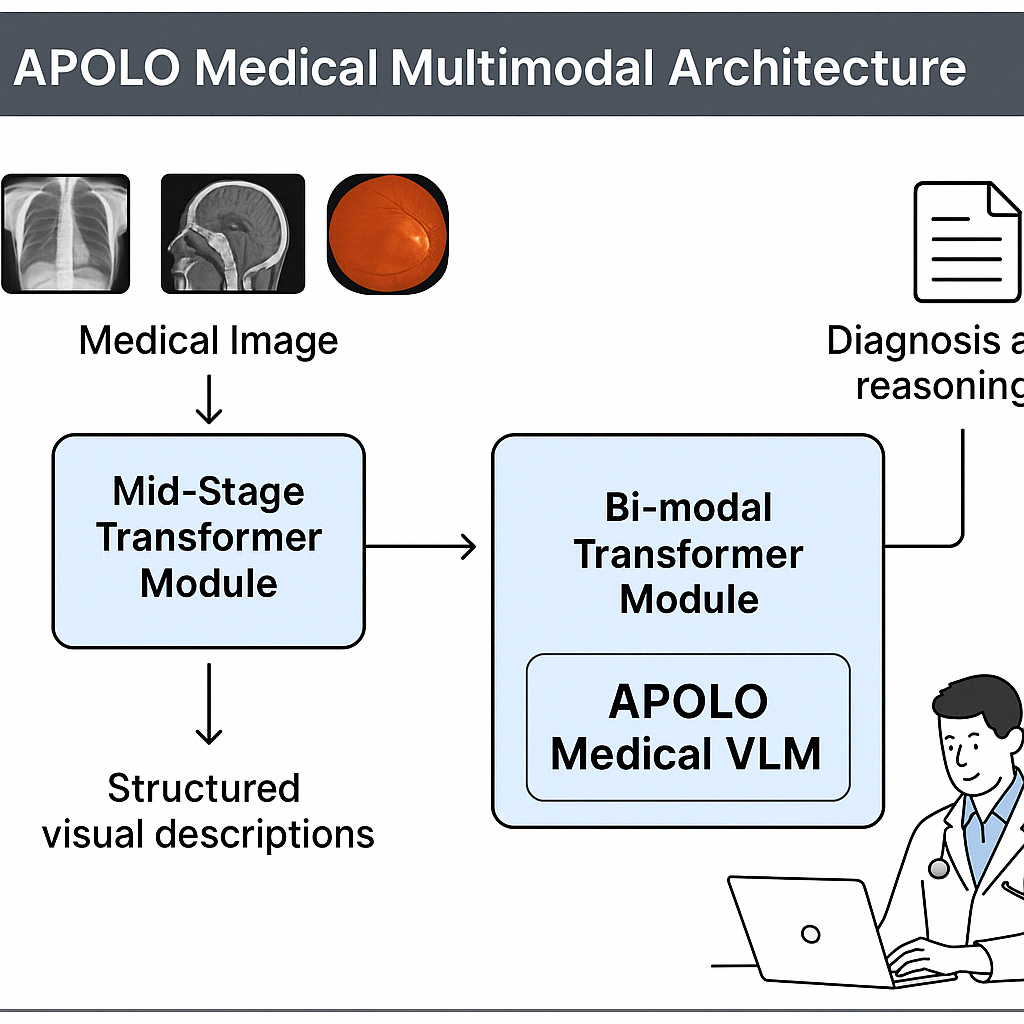

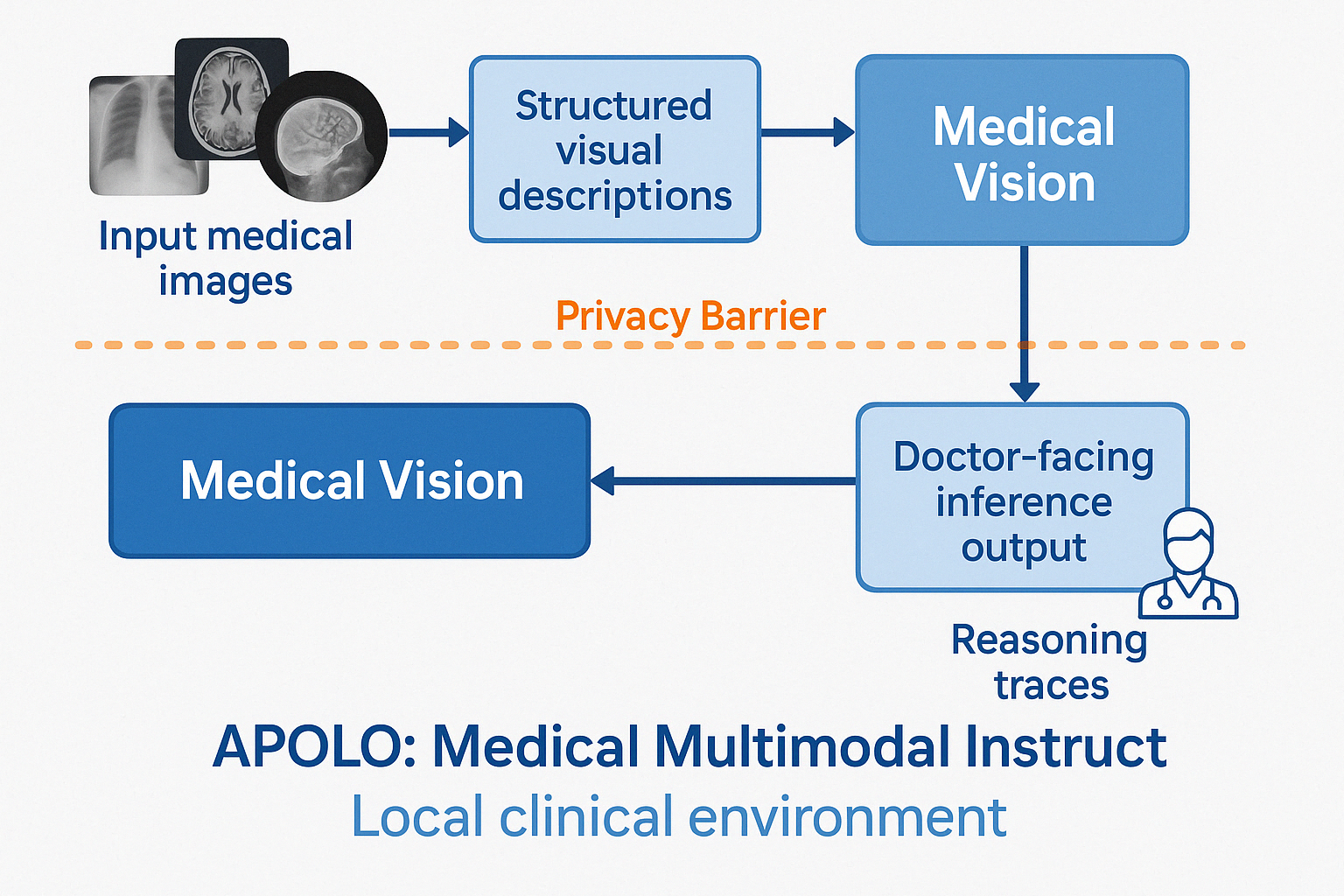

Dual-Level Explainable Medical Image Analysis

APOLO is a medical AI model with dual-level explainability for privacy-preserving medical image analysis. The project fine-tunes the DeepSeek-VL2 vision-language model with LoRA (low-rank adaptation) to specialize it for medical applications.
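
For readers unfamiliar with LoRA, the sketch below illustrates the general idea in plain Python: the pretrained weight stays frozen and only a small low-rank update is trained on top of it. This is not APOLO's training code and does not touch DeepSeek-VL2. The class name `LoRALinear` and the `rank`, `alpha`, and `seed` parameters are hypothetical, chosen only for illustration.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Frozen weight W (out x in) plus a low-rank update (alpha / rank) * B @ A.

    Hypothetical illustration only; not taken from the APOLO code base.
    """

    def __init__(self, weight, rank=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        out_dim, in_dim = len(weight), len(weight[0])
        self.weight = weight          # pretrained weight, never updated
        self.scale = alpha / rank     # standard LoRA scaling factor
        # A starts small and random, B starts at zero,
        # so the adapter changes nothing before training.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]

    def effective_weight(self):
        """Return W + scale * (B @ A)."""
        delta = matmul(self.B, self.A)
        return [
            [w + self.scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(self.weight, delta)
        ]

    def forward(self, x):
        """Apply the adapted layer to a single input vector x."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.effective_weight()]

    def trainable_parameter_count(self):
        """Only A and B are trained; W is excluded."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

With a weight of shape `out x in`, the adapter trains `rank * (in + out)` values instead of `in * out`, which is why this style of fine-tuning is attractive for large vision-language models.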